Think about the last time you clicked “I agree” without reading the terms. Or when you trusted a company with your data only to discover it had been shared with third parties. We’ve all accepted opaque terms from faceless entities, with little choice but to trust they’ll handle our information responsibly.

This dynamic represents a fundamental challenge impacting everything from social media to government transparency. It’s a problem that Steven De Costa, founder of Link Digital and co-steward of CKAN (the Comprehensive Knowledge Archive Network), has been thinking deeply about since 2018.

Flawed architecture of trust

We live in what Steven calls a “pull economy” – a system built on trust. Value and trust flow in one direction. Organizations request our trust and data while offering minimal transparency or accountability in return. This structure requires asymmetrical relationships where individuals must trust entities without adequate verification.

This leaves many feeling powerless, frustrated, and resigned to a system where we have no choice but to hope for the best. When Facebook changed its privacy settings in 2009, millions had their previously private information suddenly made public—a stark reminder of our vulnerability. The Cambridge Analytica scandal further demonstrated how easily our trust could be exploited, with data from 87 million users harvested without meaningful consent and used for political manipulation.

“Trust is the language of mothers and robbers,”

Steven explains, pointing out how the same request, “trust me”, can come from sources with wildly different intentions. We often can’t tell what these intentions are until it is too late.

This model becomes problematic at scale. In information networks, where data flows across countless nodes with minimal transparency, these trust relationships become less functional.

The Objective Observer Initiative

What if we could restructure these relationships? What if, instead of being pulled into trusting entities and platforms, we could push our intentions outward in measurable, accountable ways?

Steven began developing this vision in January 2021, during a period of global uncertainty that made him question fundamental assumptions about information systems.

“I started to post on LinkedIn, test ideas with people, and challenge myself about how I would respond to these threats,”

he explains. What began as a response to shifting perceptions around openness evolved into a framework for reimagining how data, intent, and accountability could work together.

Imagine if you could declare exactly how you want companies to use your data, with systems that automatically verify compliance. Instead of clicking “agree” on terms you’ll never read, you could publish your own, such as “I consent to my location being used for navigation only” or “My purchase history may be analyzed for recommendations but not shared”, and interact only with organizations that honor those boundaries.
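To make the idea concrete, here is a minimal Python sketch of what machine-readable terms and an automatic compliance check might look like. It is an illustration only: the class names, field names, and the `violations` function are hypothetical and are not taken from the Objective Observer Initiative or any existing system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConsentDeclaration:
    """One published boundary (hypothetical structure, for illustration)."""
    data_category: str
    allowed_purposes: frozenset
    may_share_with_third_parties: bool = False


@dataclass(frozen=True)
class DataUse:
    """A use of personal data that an organization reports or is observed making."""
    data_category: str
    purpose: str
    shared_with_third_parties: bool = False


def violations(declarations, uses):
    """Return the uses that fall outside the declared boundaries."""
    by_category = {d.data_category: d for d in declarations}
    flagged = []
    for use in uses:
        decl = by_category.get(use.data_category)
        if decl is None:
            # Assumption: no declaration for a category means no consent.
            flagged.append(use)
        elif use.purpose not in decl.allowed_purposes:
            flagged.append(use)
        elif use.shared_with_third_parties and not decl.may_share_with_third_parties:
            flagged.append(use)
    return flagged


# The two example terms from the text, expressed as data.
my_terms = [
    ConsentDeclaration("location", frozenset({"navigation"})),
    ConsentDeclaration("purchase_history", frozenset({"recommendations"})),
]

observed = [
    DataUse("location", "navigation"),
    DataUse("location", "advertising"),
    DataUse("purchase_history", "recommendations", shared_with_third_parties=True),
]

flagged = violations(my_terms, observed)
for use in flagged:
    print(f"Outside declared terms: {use.data_category} used for {use.purpose}")
```

In this sketch, the navigation use passes, while the advertising use and the shared purchase history are flagged. How such uses would be observed in practice is the hard part, and the sketch does not attempt to address it.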

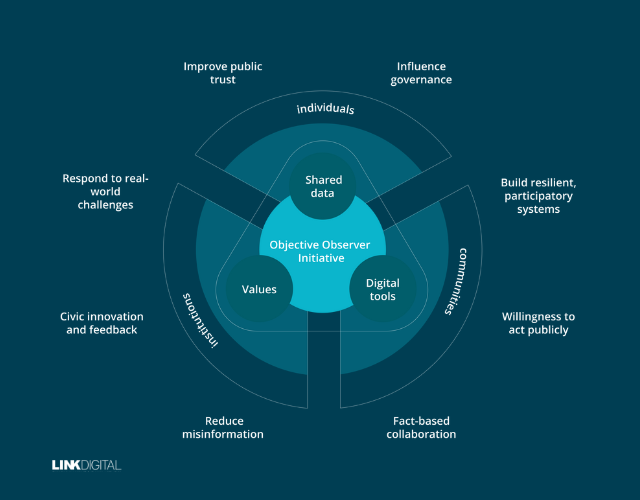

This is the vision behind the “Objective Observer Initiative”, a framework for creating a “push economy” based on honest declarations of intent rather than blind trust.

The initiative consists of three components:

- The observer (OpenData.ai) functions as an independent verification system, collecting and analyzing evidence of whether actions match declared intentions. This creates ongoing accountability rather than one-time consent.

- The social experience (OpenData.ly) serves as a public registry where individuals and organizations publish their commitments, creating a permanent, searchable record of what entities claim they will do.

- An open source community component enables collaborative development, ensuring the system itself remains transparent and accountable to its users.

Consider applying for a job. Currently, a company posts a listing with promises about workplace culture, advancement, and compensation. Applicants must trust these claims, often discovering the reality only after investing significant time or accepting the position.

In Steven’s vision, the company would make explicit declarations that could be verified against objective measures. Is the company’s workforce as diverse as it claims? Does it provide the work-life balance it advertises? The system would track these patterns, allowing potential employees to make informed decisions rather than relying on marketing claims.
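As a rough illustration of checking declarations against measures, the following Python sketch compares a company’s declared figures with observed ones. Everything here is assumed for the example: the metric names, the numbers, the tolerance rule, and the `check_claims` function are hypothetical, and a real observer would need far more careful definitions of what counts as evidence.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Claim:
    """A declared, measurable commitment (hypothetical structure)."""
    metric: str
    declared: float
    tolerance: float  # how far the observed value may differ from the declared one


def check_claims(claims, observations):
    """Map each metric to 'aligned', 'misaligned', or 'unverified'."""
    report = {}
    for claim in claims:
        observed = observations.get(claim.metric)
        if observed is None:
            # No evidence yet: the claim can be neither confirmed nor refuted.
            report[claim.metric] = "unverified"
        elif abs(observed - claim.declared) <= claim.tolerance:
            report[claim.metric] = "aligned"
        else:
            report[claim.metric] = "misaligned"
    return report


# Invented figures for a job listing's declarations.
claims = [
    Claim("average_weekly_hours", declared=40.0, tolerance=2.0),
    Claim("women_in_leadership_share", declared=0.45, tolerance=0.05),
    Claim("median_time_to_promotion_years", declared=2.0, tolerance=0.5),
]

# Invented measurements; the promotion metric has no data yet.
observations = {
    "average_weekly_hours": 47.5,
    "women_in_leadership_share": 0.44,
}

report = check_claims(claims, observations)
for metric, status in report.items():
    print(f"{metric}: {status}")
```

The symmetric tolerance is a simplification chosen to keep the example short. The “unverified” status matters as much as the other two, because a declaration with no supporting evidence is still only a request for trust.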

This approach flips the traditional model. Instead of organizations asking us to trust them, individuals would state:

“This is who I am. These are my boundary conditions. This is how I intend to act.”

Those declarations become observable, measurable, and accountable.

“Anyone can be honest,” Steven points out. “Any single one of the eight billion people on this planet can have a declared intent, a declared persona, a declared set of actions they’re going to take.”

Applied to data systems, the Objective Observer Initiative transforms how we think about open data. Rather than a passive resource, data becomes active evidence of alignment with stated intentions. Did a government agency spend money as declared? Did a company uphold its stated values?

AI as the material for new structures

The Objective Observer Initiative requires sophisticated systems for monitoring intentions and actions. This is where AI becomes essential, not as a solution itself, but as the material from which solutions can be built.

Steven describes AI as being like “wood”, a constructive framework that can be shaped into what we choose. The question isn’t what AI can do, but what we want to build with it.

Today, AI is often deployed in ways that diminish human agency. Recommendation algorithms analyze our past behavior to predict what will keep us engaged, often leading us down increasingly narrow content paths. Credit scoring systems make life-altering decisions based on opaque criteria.

What excites Steven is the alternative: AI that can become “very close to the skin”, i.e., technology that embraces human intention and enhances our ability to express it clearly. Rather than predicting and manipulating us based on past behavior, AI could help us articulate and follow through on our declared intentions.

“We can have a clear signal about what we want and how we might get it with technology,”

he says. This vision sees AI not as replacing human agency but as strengthening it – giving individuals more power to define who they are and what they want to be.

From CKAN to a new vision

Steven’s thinking didn’t emerge in a vacuum. As co-steward of CKAN, an open-source data management system used by governments worldwide, he has worked at the intersection of data, transparency, and public interest for more than a decade.

CKAN embodies many values underpinning the Objective Observer Initiative.

“What CKAN is, is a tool… It’s owned by the contributors, and it’s owned by the users,” Steven says.

The system allows decentralized data publishing with multiple custodians, avoiding vendor lock-in while making data interoperable and discoverable.
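Discoverability in CKAN comes largely from its Action API, which exposes dataset search over HTTP. The Python sketch below builds a `package_search` request and reads titles from a response in the shape that endpoint returns. The portal address `https://data.example.org/` is a placeholder and the sample response is invented for illustration; no request is actually sent.

```python
from urllib.parse import urlencode


def package_search_url(portal_url, query, rows=5):
    """Build a package_search request URL for a CKAN portal."""
    params = urlencode({"q": query, "rows": rows})
    return f"{portal_url.rstrip('/')}/api/3/action/package_search?{params}"


def dataset_titles(response):
    """Extract dataset titles from a decoded package_search JSON response."""
    if not response.get("success"):
        return []
    # Fall back to the dataset's URL name when no title is set.
    return [pkg.get("title", pkg["name"]) for pkg in response["result"]["results"]]


# Placeholder portal address, not a real CKAN instance.
url = package_search_url("https://data.example.org/", "budget spending")
print(url)

# Invented response, shaped like a package_search result.
sample_response = {
    "success": True,
    "result": {
        "count": 1,
        "results": [{"name": "budget-2023", "title": "Budget 2023"}],
    },
}
print(dataset_titles(sample_response))
```

Because every CKAN portal exposes the same endpoint, the same two functions would work against any of them, which is one practical sense in which the data is interoperable.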

This experience showed both the potential and limitations of current open data approaches. While CKAN creates infrastructure for publishing open data, the deeper challenge remains: generating demand for that data and connecting it to meaningful accountability systems.

The connection between CKAN and the Objective Observer Initiative represents an evolution—from making data available to making it actionable in service of declared intentions and public accountability.