As the climate crisis intensifies, the need for high-quality, accessible data has never been more urgent. Climate data portals serve as centralized repositories where climate-related information can be stored, organized, accessed, and shared across organizations and borders.

The role of climate data portals

Climate data portals have become essential digital infrastructure in the collective response to climate change.

I had a conversation with Lex du Plessis, who has led the implementation of several major open data portals. I’ve come to know him as someone who can express complex ideas without losing clarity, a rare quality when discussing open data matters that can quickly become abstract.

Climate data portals might seem like straightforward repositories of information, but after speaking with Lex, a Technical Delivery Manager at Link Digital who implements CKAN-based data portal solutions, it’s evident that they’re far more complex and nuanced than most people realize.

For data managers in government agencies and NGOs wrestling with how to build effective climate data infrastructure, here are the most valuable insights from someone who’s in the trenches daily.

Flexibility overcomes rigid solutions

The most important advantage of open-source platforms like CKAN for climate data is their flexibility. As Lex explained, many proprietary solutions work well for specific use cases but become incredibly expensive when you need to adapt them.

“With CKAN, you don’t run into walls where suddenly your organization has to change how it operates to fit the platform’s constraints,” Lex noted.

This flexibility comes from CKAN’s community-driven nature. Because different organizations have wildly different requirements, the platform has evolved to accommodate varied needs rather than forcing a one-size-fits-all approach, and its architecture can be customized with extensions to meet specific customer needs.

Lex is a very passionate person, and this became evident when he described how Link Digital approaches client projects – focusing not just on technical specifications but also on delivering holistic solutions that genuinely solve their data management challenges.

The standards dilemma no one talks about

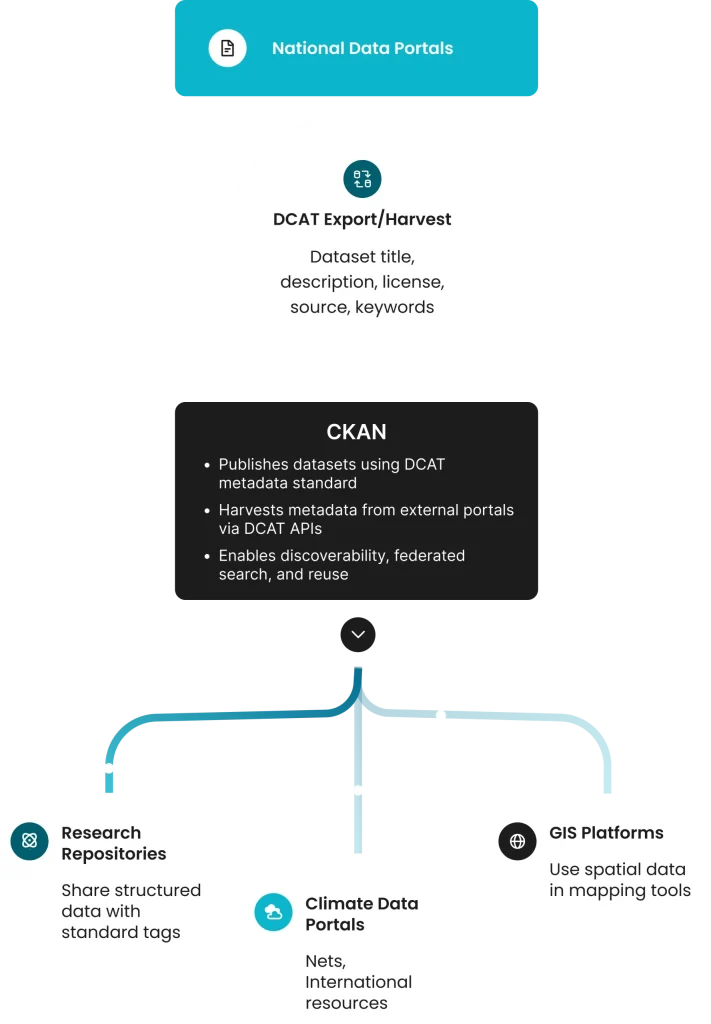

One of the fascinating aspects of the discussion centered on standards – the hidden framework of effective climate data sharing. When organizations don’t follow common standards, something as simple as harvesting data between portals becomes incredibly complex.

Lex gave a surprisingly clear example:

“If you don’t know what a ‘house’ is, how can you compare houses? Is it a single room where you sleep? Is it a five-room mansion?”

When climate data lacks standardization, you waste enormous resources just translating between systems.

“Your definition for this metadata field versus my definition: are they the same? Can I say they are equal, or do I need to create a new metadata field to capture what you’re trying to communicate?”
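To make the “your definition versus my definition” problem concrete, here is a minimal Python sketch of a metadata crosswalk. The two portals, their field names, and the `to_shared` helper are hypothetical; the shared keys borrow DCAT-style term names purely for illustration. The design choice worth noting is that fields with no agreed equivalent are surfaced for a human decision rather than silently dropped or force-matched.

```python
# Hypothetical field names for two portals; real schemas will differ.
# Values are the shared (DCAT-style) terms both sides agree to use.
PORTAL_A_TO_SHARED = {
    "title": "dct:title",
    "summary": "dct:description",
    "tags": "dcat:keyword",
}
PORTAL_B_TO_SHARED = {
    "name_en": "dct:title",
    "abstract": "dct:description",
    "keywords": "dcat:keyword",
}


def to_shared(record, crosswalk):
    """Translate a record into shared terms, returning (mapped, unmapped)."""
    mapped, unmapped = {}, {}
    for field, value in record.items():
        if field in crosswalk:
            mapped[crosswalk[field]] = value
        else:
            # No agreed equivalent: a person must decide whether this is
            # "the same" as an existing term or needs a new shared field.
            unmapped[field] = value
    return mapped, unmapped


if __name__ == "__main__":
    a = {"title": "Daily rainfall", "summary": "Gauge readings", "gauge_type": "manual"}
    b = {"name_en": "Daily rainfall", "abstract": "Station readings"}
    mapped_a, unmapped_a = to_shared(a, PORTAL_A_TO_SHARED)
    mapped_b, unmapped_b = to_shared(b, PORTAL_B_TO_SHARED)
    print(mapped_a["dct:title"] == mapped_b["dct:title"])  # True
    print(unmapped_a)  # {'gauge_type': 'manual'}
```

Keeping the crosswalk as plain data, rather than burying it in code, makes each equivalence decision easy to review and agree on between organizations.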

A real-world example of standardized workflows is Spain’s main data portal, which captures data from provincial sources in a hierarchical relationship while also exchanging standardized information with the European data portal, creating a seamless flow of climate information across borders.

The perfect vs. good enough debate

Perhaps the most thought-provoking insight was about data quality versus data availability. Many organizations hesitate to publish climate data that doesn’t meet scientific standards, yet there is a constant trade-off between the volume of published data and its quality.

Lex described his father-in-law’s simple rain catcher in the garden. It’s not scientifically precise, but if everyone in the neighborhood used one, over time you’d get valuable rainfall patterns for the local area.

“You could dismiss it as bad data because it wasn’t captured using specific tools,” Lex said. “But there’s still value in that data.”

I found this analogy brilliant. It provides a clear mental model for the rather vague concept of data quality, especially when debating whether imperfect data should be published at all. It shifted my thinking from “is this data good enough?” to “how do we properly describe the limitations of this data so others can decide its usefulness?”

This raises an important question for climate data managers: should they withhold imperfect data, or publish it with appropriate metadata so users can judge its value? The CKAN community itself debates this, with some arguing the platform isn’t restrictive enough.

Lex leans toward publishing.

“If you post it, it can be used. If you don’t post it, nobody knows it exists.”
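For data managers who want to act on this, the sketch below shows one way to publish an imperfect dataset to a CKAN portal while stating its limitations up front. It builds a payload for CKAN’s `package_create` action and posts it using only the Python standard library. The `collection_method` and `known_limitations` keys, the organization name, and both helper functions are hypothetical; depending on how your portal’s schema is configured, custom fields may need to be declared as top-level fields rather than `extras`, and the API token must belong to a user allowed to create datasets in that organization.

```python
import json
import re
import urllib.request


def build_package(title, description, owner_org, limitations, collection_method, tags):
    """Build a CKAN package_create payload that states data limitations up front."""
    # CKAN dataset names must be lowercase and URL-safe (letters, digits, - and _).
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")[:100]
    return {
        "name": slug,
        "title": title,
        # Put the caveats in the human-readable description too, not only in metadata.
        "notes": f"{description}\n\nKnown limitations: {limitations}",
        "owner_org": owner_org,
        "tags": [{"name": t} for t in tags],
        # Hypothetical custom keys; align them with your portal's metadata schema.
        "extras": [
            {"key": "collection_method", "value": collection_method},
            {"key": "known_limitations", "value": limitations},
        ],
    }


def publish(ckan_url, api_token, package):
    """POST the payload to CKAN's package_create action and return the new dataset."""
    request = urllib.request.Request(
        f"{ckan_url.rstrip('/')}/api/3/action/package_create",
        data=json.dumps(package).encode("utf-8"),
        headers={"Authorization": api_token, "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["result"]


if __name__ == "__main__":
    pkg = build_package(
        title="Neighbourhood Rainfall 2024",
        description="Daily totals from volunteer rain catchers.",
        owner_org="community-weather",  # hypothetical organization
        limitations="Uncalibrated gauges; readings taken by hand at varying times.",
        collection_method="manual rain catcher",
        tags=["rainfall", "citizen-science"],
    )
    print(pkg["name"])  # neighbourhood-rainfall-2024
    # publish("https://your-portal.example", "YOUR-API-TOKEN", pkg)
```

The limitations appear twice on purpose: once in free text for people browsing the portal, and once as a structured field that harvesters and filters can act on.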

Measuring real-world impact

How do you know if your climate data portal is making a difference? Lex suggests several approaches:

- Use Digital Object Identifiers (DOIs), permanent identifiers assigned to digital content, to track citations of your datasets.

- Monitor site visits and user engagement.

- Ensure your data is discoverable through services like Google Dataset Search.
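Discoverability through Google Dataset Search generally relies on schema.org `Dataset` markup embedded in each dataset page as JSON-LD, and a DOI can be exposed through the `identifier` property so citations point back to a stable address. The sketch below generates that markup; the function names, the example URL, and the placeholder DOI are hypothetical. In a CKAN portal, an extension such as ckanext-dcat can typically emit this markup for you, so it is worth checking its documentation before hand-rolling it.

```python
import json


def dataset_jsonld(name, description, url, doi=None, keywords=(), license_url=None, temporal=None):
    """Build schema.org Dataset markup; optional properties are added only when known."""
    data = {
        "@context": "https://schema.org/",
        "@type": "Dataset",
        "name": name,
        "description": description,
        "url": url,
    }
    if doi:
        # Expose the DOI as a resolvable identifier so citations can be traced.
        data["identifier"] = f"https://doi.org/{doi}"
    if keywords:
        data["keywords"] = list(keywords)
    if license_url:
        data["license"] = license_url
    if temporal:
        data["temporalCoverage"] = temporal  # ISO 8601 interval, e.g. "2015-01-01/2024-12-31"
    return data


def as_script_tag(data):
    """Wrap the markup in the script element that crawlers read from the page."""
    return '<script type="application/ld+json">' + json.dumps(data, indent=2) + "</script>"


if __name__ == "__main__":
    markup = dataset_jsonld(
        name="Neighbourhood Rainfall 2024",
        description="Daily rainfall totals collected by volunteers with simple rain catchers.",
        url="https://your-portal.example/dataset/neighbourhood-rainfall-2024",  # hypothetical
        doi="10.0000/placeholder",  # placeholder, not a real DOI
        keywords=["rainfall", "citizen science"],
        temporal="2024-01-01/2024-12-31",
    )
    print(as_script_tag(markup))
```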

Extensions that matter

When implementing a new portal, Lex relies heavily on community-developed extensions (plug-ins that add specific functionality) rather than reinventing features.

“You could reinvent the wheel every time you need a tire, but that’s a lot of work.”

Three extensions he finds particularly valuable:

- Charts extension for visualizing time-series climate data.

- Single sign-on for easier user management.

- Data Catalog Vocabulary (DCAT) extension, which implements a standard vocabulary for describing datasets, enabling better interoperability.
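To show what “a standard vocabulary for describing datasets” looks like in practice, here is a minimal DCAT description of one dataset and its downloadable file, serialized as JSON-LD. The `dcat_dataset` helper and the example URLs are hypothetical. In CKAN, the DCAT extension generates these descriptions from existing dataset metadata, so a sketch like this is mainly useful for understanding what a harvester on the other end actually receives.

```python
import json

# Namespace prefixes for the two vocabularies DCAT builds on.
CONTEXT = {
    "dcat": "http://www.w3.org/ns/dcat#",
    "dct": "http://purl.org/dc/terms/",
}


def dcat_dataset(uri, title, description, keywords, download_url, media_type):
    """Describe one dataset and one downloadable distribution in DCAT (JSON-LD)."""
    return {
        "@context": CONTEXT,
        "@id": uri,
        "@type": "dcat:Dataset",
        "dct:title": title,
        "dct:description": description,
        "dcat:keyword": list(keywords),
        "dcat:distribution": [
            {
                "@type": "dcat:Distribution",
                "dcat:downloadURL": {"@id": download_url},
                # DCAT recommends IANA media types; expressed here as an IANA URI.
                "dcat:mediaType": {
                    "@id": f"https://www.iana.org/assignments/media-types/{media_type}"
                },
            }
        ],
    }


if __name__ == "__main__":
    ds = dcat_dataset(
        uri="https://your-portal.example/dataset/neighbourhood-rainfall-2024",  # hypothetical
        title="Neighbourhood Rainfall 2024",
        description="Daily rainfall totals from volunteer rain catchers.",
        keywords=["rainfall", "citizen science"],
        download_url="https://your-portal.example/files/rainfall-2024.csv",  # hypothetical
        media_type="text/csv",
    )
    print(json.dumps(ds, indent=2))
```

Because every portal that speaks DCAT reads `dct:title` or `dcat:downloadURL` the same way, no per-partner translation table is needed for these core fields.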

Practical insights for climate data portal managers

After speaking with Lex and reviewing the best practices in data portal implementation, several key insights emerge for organizations managing or launching climate data portals:

- Prioritize interoperability from the start. Adopt widely used standards like DCAT to ensure your data can communicate with other systems. This isn’t just a technical consideration; it’s fundamental to your data’s usefulness.

- Embrace the “publish proper metadata” philosophy. Rather than withholding imperfect data, focus on providing clear context about data limitations so users can make informed decisions.

- Leverage community-developed extensions. The open-source community has likely already solved many of your challenges. Use existing extensions before building custom solutions.

- Implement impact measurement tools early. Set up DOI (Digital Object Identifier) assignment, analytics, and discoverability features from the beginning to track how your data is being used.

Climate data portals aren’t just technical projects. They’re critical infrastructure for addressing one of humanity’s most pressing challenges. By following these principles, data managers can build systems that not only store information but also meaningfully contribute to climate action.

Link Digital builds climate data portals based on CKAN. Send a message today.